(Update April 2024: When I originally wrote this blog post, the official Raspberry Pi distribution of Debian hadn’t been updated for Debian 12 and so I was still on Debian 11. They’ve since updated it, so I did a trial run on my spare Raspberry Pi 4B+ and made a couple of minor changes to the Ansible playbooks, and flashing the “production” 4B+ to Debian 12 with the full Ansible setup was an absolute unqualified success! 🎉)

Back in 2017 when I first moved off the old and busted NinjaBlocks platform to a Raspberry Pi for my temperature sensor setup, I said:

> […] if the hardware itself died I’d be stuck; yes, it was all built on “open hardware” but I didn’t know enough about it all to be able to recreate it.

I definitely have no problems with the hardware now (and with my move to ESP32s and MQTT for the temperature sensors themselves it’s even simpler), but I recently realised that the software configuration on the Raspberry Pis was still a problem: the configuration and setup of everything had had a steady pace of organic updates and tweaks and if one of the SD cards died or had a problem and I had to reformat it, I’d have a hell of a time recreating it afresh. On the “main” Raspberry Pi 4B+ alone I would need to:

- Install all the various software packages (vim, git, tmux, Docker, etc.)

- Add my custom shell configuration and bashmarks

- Install Chrony and configure it to allow access from the ESP32s

- Configure the drivers for the JustBoom DAC HAT and install and configure shairport-sync to allow for AirPlay to the big speakers in the lounge room

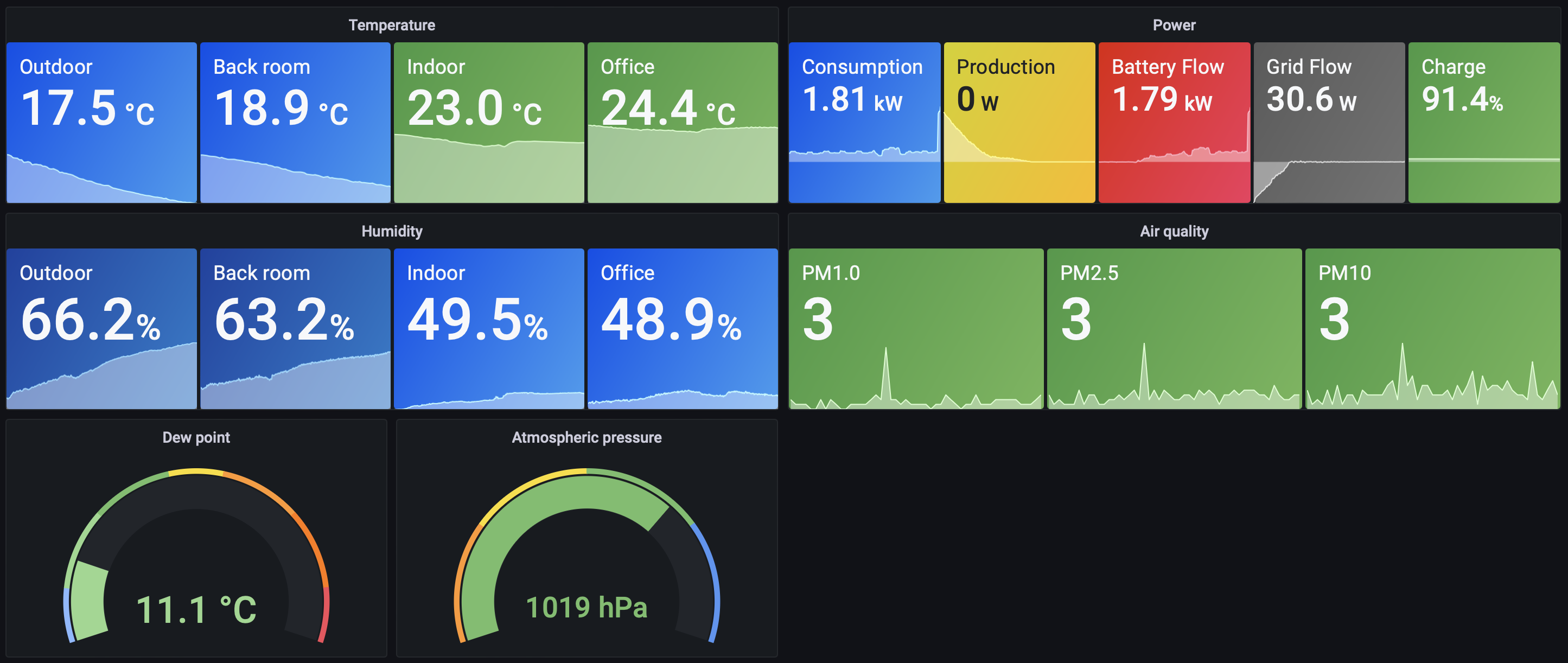

- Configure and run the Mosquitto Docker container to allow all my temperature, humidity, air quality, and power data to flow to all the various places it needs to go

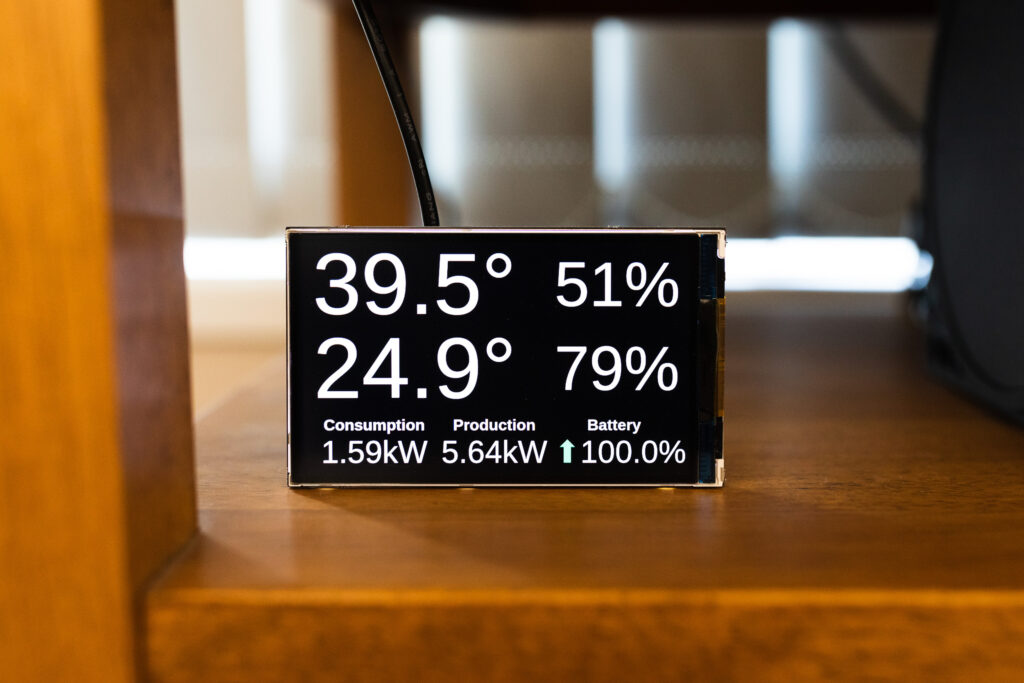

- Configure and run the pi-home-dashboard Docker container so the Raspberry Pi Zero Ws can display our little temperature dashboards

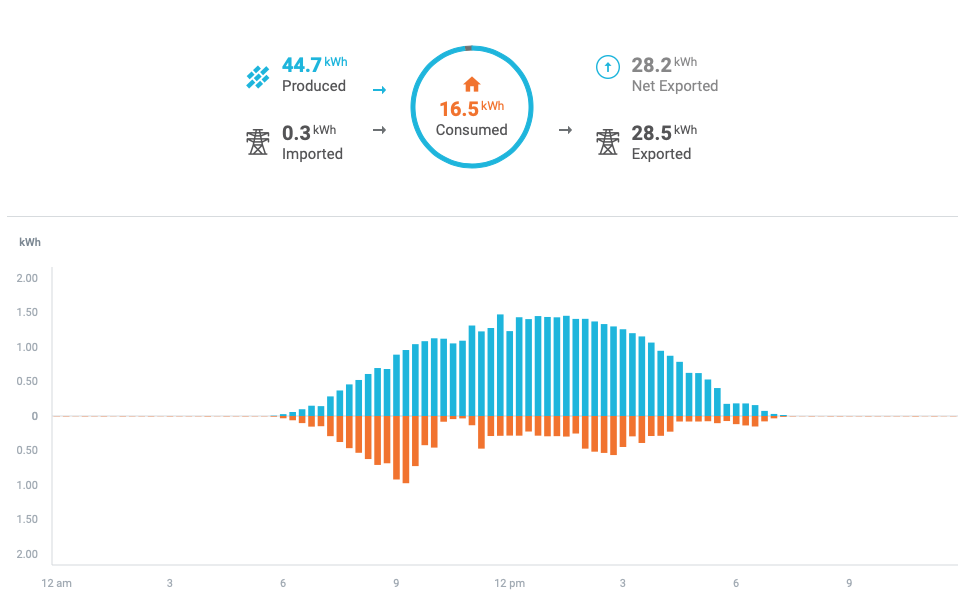

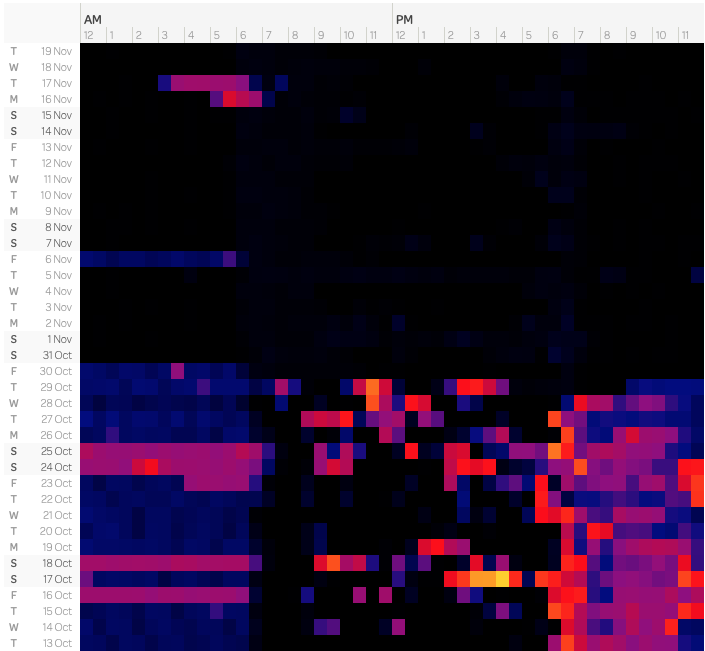

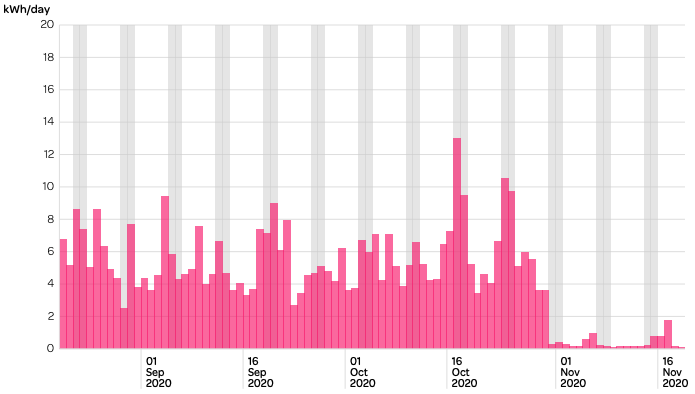

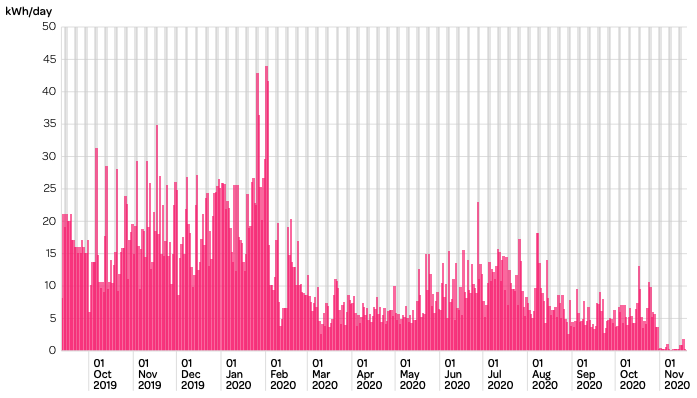

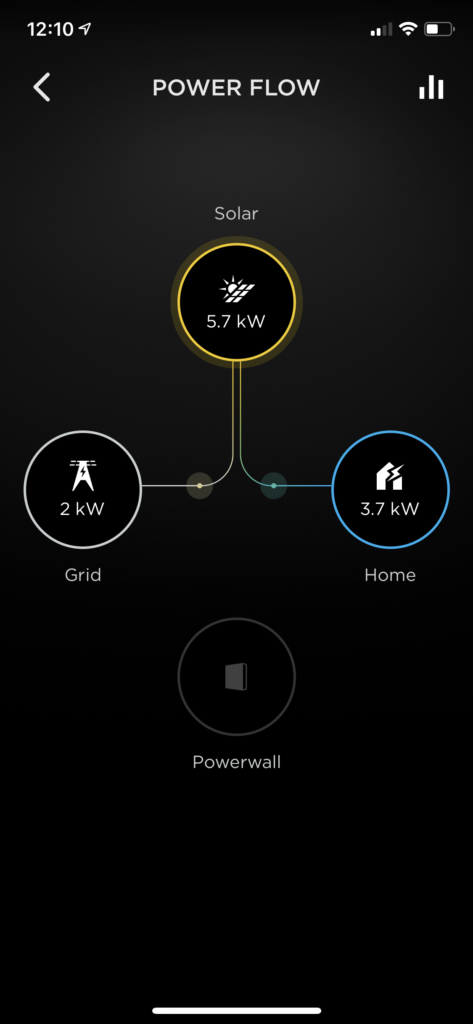

- Configure and run the powerwall-to-pvoutput-uploader Docker container so our power usage data can be both sent to PV Output and also be read by InfluxDB and the Raspberry Pi Zero W dashboard

In addition to all of that, the Raspberry Pi Zero Ws that display our little dashboards required a whole bunch of constant tweaks and changes to get them working properly (they run a full Linux desktop and display the dashboard in Chromium, and Chromium has an extremely irritating habit of giving a “Chromium didn’t shut down properly” message instead of loading the page you tell it to if there were basically any issues at all; fixing that required a whole lot of trial and error until I narrowed down the specific incantations to get it to stop).

Setting all of that back up from scratch would have been an absolute nightmare so I figured I should probably automate it in some fashion. And in addition, it means I would be able to keep future changes under version control as well so no matter what I’ve done I’d be able to restore it if necessary. At work we use a piece of software called Ansible to bring up the required software and configuration on the Amazon EC2 instances we run, so I thought that’d be a good place to start.

Even though it’s developed by Red Hat and isn’t just some random open-source piece of software (although it is open-source), I still found the documentation to be not great in terms of explaining everything step-by-step and building on that knowledge in subsequent steps. But after a bunch of reading and trial and error, plus several weeks of getting it all working, I have my entire Raspberry Pi setup for all the Pis we have at home fully automated! I can wipe the SD card, reflash it with a fresh copy of Raspbian, then run Ansible and it gets everything installed and configured and working exactly how I need it.

I uploaded the whole shebang to GitHub to hopefully help others out as well. It’s obviously completely customised for our setup, but it at least gives a reasonable idea of how everything works.

It starts by creating an inventory, basically a list of the hostnames you want to run playbooks against (a “playbook” is a file that describes the list of steps to run in order to get to the desired state you need). Alongside that, you can group the hosts together so you can target a playbook to run on a specific set of hosts. For example, the server group only consists of the main Raspberry Pi 4B+ described above, but the dashboards group consists of all three of the Pi Zero Ws that are running the dashboards.
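To make the grouping concrete, here is a minimal sketch of what an inventory like that could look like in Ansible's YAML inventory format. The group names come from the description above, but the file name and every hostname are made up for illustration and are not taken from the actual repository.

```yaml
# inventory.yml (hypothetical file name; all hostnames below are placeholders)
all:
  children:
    # The "server" group holds only the main Raspberry Pi 4B+
    server:
      hosts:
        pi-server.local:
    # The "dashboards" group holds the three Pi Zero Ws
    dashboards:
      hosts:
        dashboard-1.local:
        dashboard-2.local:
        dashboard-3.local:
```

A playbook that declares `hosts: dashboards` would then only touch the three Pi Zero Ws, and one that declares `hosts: all` would touch every Pi in the file.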

One of the really handy things with Ansible is that you can set variables that will be reused in various places, and you can configure them for all hosts, or per group, or per individual host. I’m using a combination of those, and inside the server group it will actually look up items from within 1Password so I can commit the code to source control and not be storing secrets in it. The per-host variables are what I use to specify the dashboard URL that each of the Pi Zero Ws should load.
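As a rough sketch of the secrets lookup, a group variable file might look something like the following. This assumes the `community.general.onepassword` lookup plugin (which relies on the 1Password CLI being installed and signed in on the machine running Ansible); the post doesn't say which mechanism is actually used, and the file path, variable name, and item name here are all hypothetical.

```yaml
# group_vars/server.yml (hypothetical path; variable and item names are made up)
# Assumes the community.general collection and the 1Password CLI are available
# on the machine that runs ansible-playbook.

# The value is fetched from 1Password when the variable is used, so the secret
# itself never ends up in the repository.
mqtt_password: "{{ lookup('community.general.onepassword', 'Mosquitto', field='password') }}"
```

Any task or template that runs against a host in the server group could then refer to `{{ mqtt_password }}` like any other variable.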

The playbooks themselves live in the playbooks directory, and they specify a set of hosts to run against, and a series of roles to run. The roles are reusable sets of tasks, so for example I run the initialisation role against all of the Raspberry Pis no matter what they’re ultimately going to be doing, for doing the initial things like setting the hostname and updating all the software packages, configuring Git, etc.
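A playbook along those lines can be very short, because the real work lives in the roles. The sketch below uses the role names mentioned in this post, but the file name and the exact role directory names are assumptions rather than a copy of the real playbook.

```yaml
# playbooks/dashboards.yml (hypothetical file name)
- name: Set up the dashboard Raspberry Pi Zero Ws
  hosts: dashboards        # the inventory group to run against
  become: true             # most setup steps need root
  roles:
    - initialisation       # shared by every Pi: hostname, package updates, Git config
    - dashboard            # only for the dashboard Pis
```

Roles run in the order they are listed, so the shared initialisation always happens before the host-specific setup.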

After the initialisation is done, the server playbook will run all the various steps to get Docker installed, NVM and Node.js installed, then get everything else configured and installed that needs to be configured and installed. Compare that to the dashboards playbook, which also runs the same initialisation steps, but then runs the dashboard role which installs the drivers for the HyperPixel display and various other things that need doing, and will configure the autostart file so on boot Chromium comes up with the correct URL depending on which Raspberry Pi it’s running on! The dashboard_url variable in that template file is set in the host_vars directory per specific hostname, so I can customise it for each one.
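The per-host URL mechanism can be sketched as two small files: one that sets dashboard_url for a single host, and one task that renders the template. Only the dashboard_url variable and the host_vars directory come from the description above; the hostname, URL, file paths, and the autostart location (which assumes an LXDE desktop session for the pi user) are illustrative guesses.

```yaml
# --- File 1: host_vars/dashboard-1.local.yml (hypothetical hostname and URL) ---
dashboard_url: "http://pi-server.local:3000/lounge"

---
# --- File 2: roles/dashboard/tasks/main.yml (hypothetical excerpt) ---
# Renders templates/autostart.j2 onto the Pi. The template would contain a line
# roughly like:
#   @chromium-browser --kiosk {{ dashboard_url }}
# and Ansible substitutes the value from host_vars for whichever Pi it is on.
- name: Write the desktop autostart file that launches Chromium
  ansible.builtin.template:
    src: autostart.j2
    dest: /home/pi/.config/lxsession/LXDE-pi/autostart   # assumed location
    owner: pi
    group: pi
    mode: "0644"
```

Because the template is the same for every dashboard and only the variable differs, adding another dashboard Pi would just mean adding one more host_vars file.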

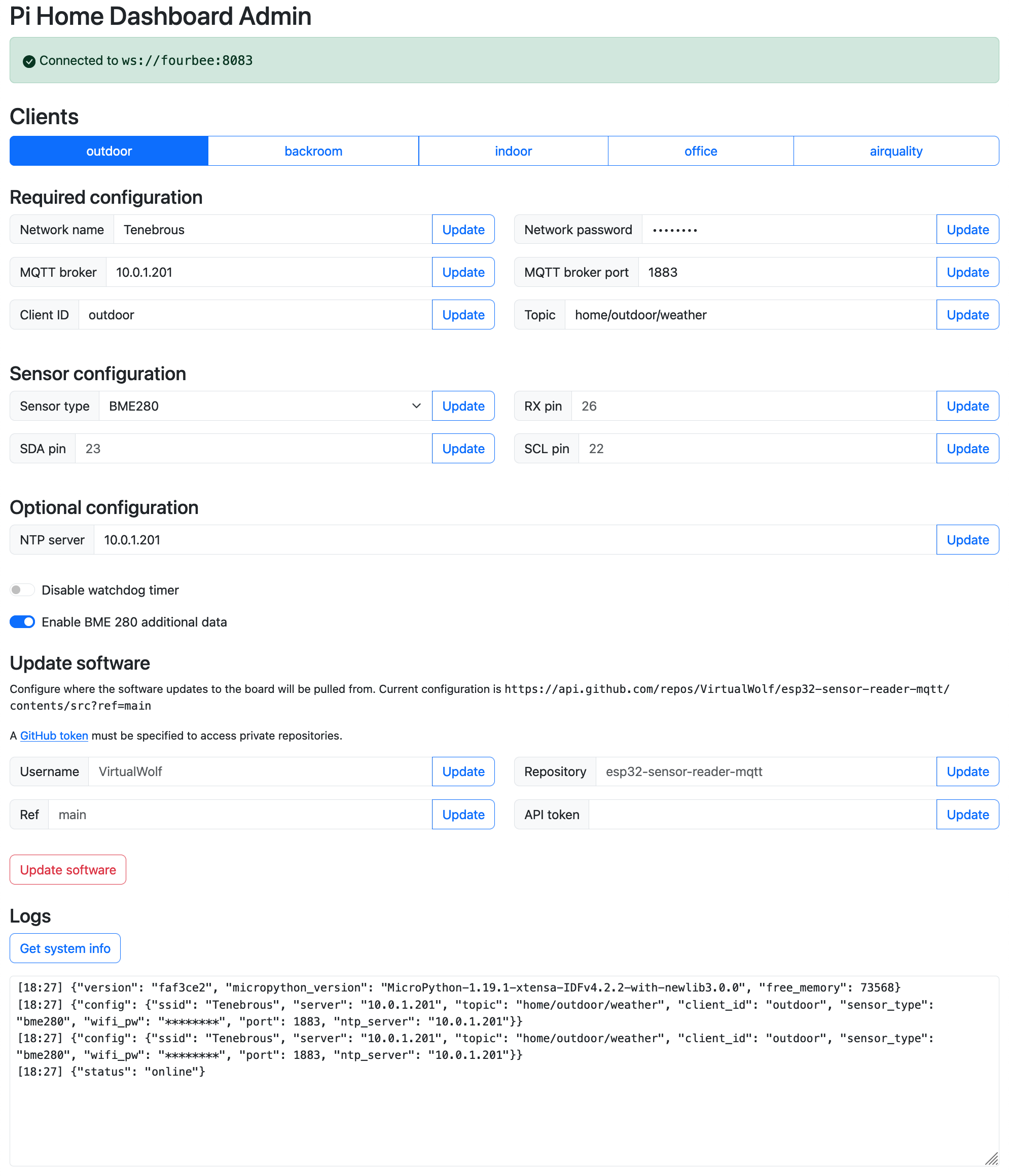

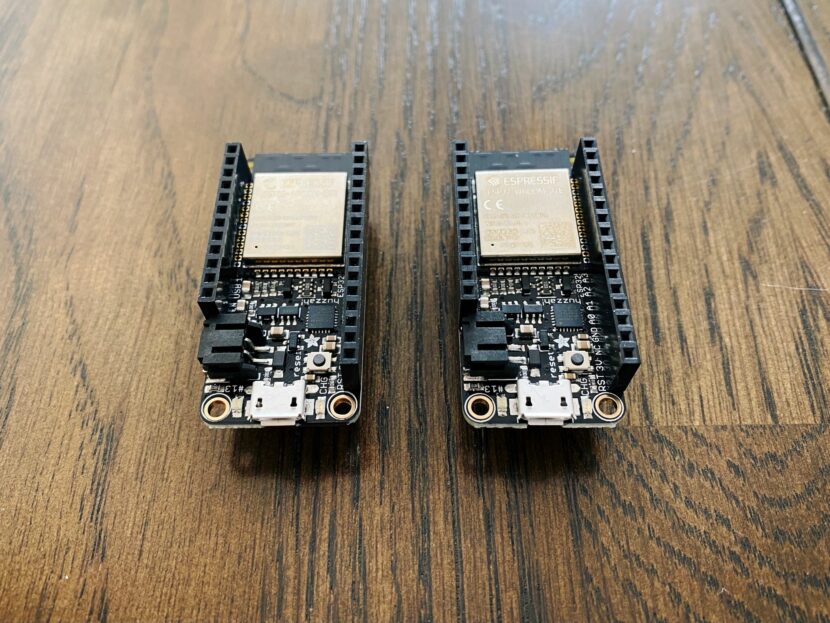

After my complete success here, I decided I wanted to do the same for when I needed to reflash my ESP32s, because previously it relied on me remembering to update a configuration file with the name of the ESP32 and I had definitely messed that up on a couple of occasions. That was relatively straightforward as well, and I added it to my esp32-sensor-reader-mqtt repository (and included a playbook to just erase a board because I never did it quite often enough to remember what the specific steps were).

And then finally after that, I decided I should also automate my Linode setup. I’d posted back in 2019 about using Linode’s StackScripts to set everything up, but the problem with that is that you run it at the very beginning and your Linode is set up appropriately at that point, but any changes you make after that aren’t saved anywhere, so you’re essentially back to square one again. The final sentence in that blog post was this:

> As long as I’m disciplined about remembering to update my StackScript when I make software changes, whenever the next big move to a new VM is should be a hell of a lot simpler.

But in news that will surprise nobody, I was not at all disciplined. 😅 The other problem is that you can’t test the StackScript as you’re going (they only run when you first spin up the Linode afresh); you have to update it and hope those steps work in future. With Ansible, the idea is that everything is idempotent, so you can run everything as many times as you want and it won’t change anything that has already been configured, which lets you easily test out parts of the playbook updates without needing to wipe the whole damn thing. It’s taken a bit over a month of working on it after dinner and on weekends, but now the whole Linode setup is fully automated as well.
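To illustrate what idempotent means in practice, here is a small sketch of two tasks that describe a desired state rather than a command to run. It borrows the Chrony setup from the Raspberry Pi list earlier as the example; the subnet is a placeholder and the tasks are not taken from the real playbooks.

```yaml
# Hypothetical excerpt from a role's tasks file
- name: Ensure Chrony is installed
  ansible.builtin.apt:
    name: chrony
    state: present          # does nothing if the package is already installed

- name: Allow devices on the local network to query Chrony
  ansible.builtin.lineinfile:
    path: /etc/chrony/chrony.conf
    line: "allow 192.168.0.0/24"   # placeholder subnet
    # lineinfile only adds the line if it is not already in the file
```

On the first run both tasks would report `changed`; on every run after that they report `ok` and leave the system alone. Running `ansible-playbook` with `--check` goes one step further and only reports what would change without changing it.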

However, the other wrinkle is that where the Raspberry Pis don’t store any data on them and can just be wiped without problem, my Linode hosts this blog, my website and all its images, all the various images I’ve posted to Ars Technica over the years, and a bunch of other things too. I ended up splitting the Ansible process into two: there’s the configuration, and then a separate playbook that copies everything over from the old Linode onto the new one, registers a new SSL certificate with Let’s Encrypt, and updates Cloudflare to point the DNS of my website and blog and the general server hostname to the new Linode.

That bit was a bit nerve-wracking. I tested the process a bunch of times and pulled the trigger for real last weekend, then had a minor panic attack when I realised that the database dump of my website hadn’t been reimported since I originally tested the process back on the 9th (I suspect it didn’t import because there was already data in the database, but unfortunately it didn’t actually error out so I didn’t know), so I didn’t have any of the posts I’d made nor any of the temperature data since then. 😬 Fortunately I realised this the morning after I’d done the migration and had wisely left the old Linode up and running, so I renamed the database on the new Linode so I could harvest the temperature data that had been sent since the migration, dumped the old database from the old Linode and imported it into the correct location on the new Linode, and then exported and reimported just the missing temperature data, and we’re back in business.

This time I should be able to revisit this in three or four years when I next do a big upgrade and it should actually be quite painless (famous last words, I know, but I’m much more confident this time).